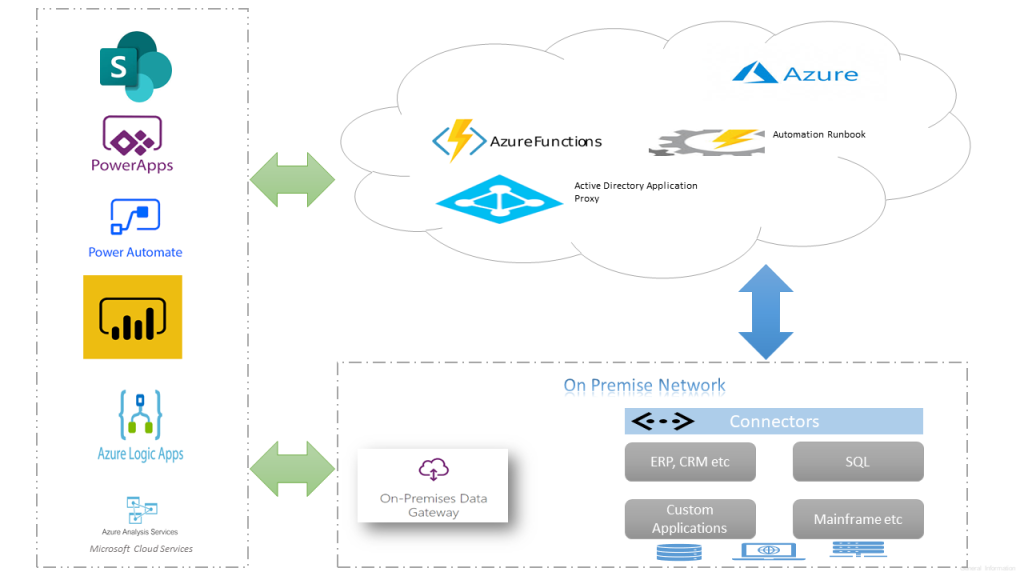

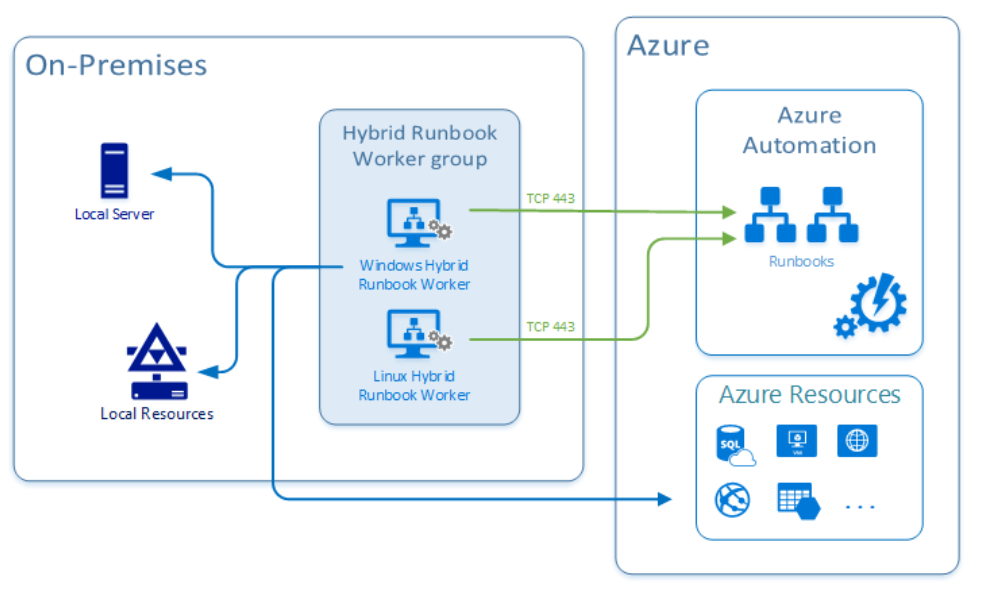

In Part 1 of this blog series on using managed identities in Power Apps and Power Automate to access the Microsoft Graph API securely, I covered the setup and configuration of the Azure API Management service with the necessary Microsoft Graph permissions for the managed identity. Building on that foundation, Part 2 takes the integration further by making the APIs available as a custom connector in Power Apps and Power Automate, secured with API key authentication.

Azure API Management Instance: Managing API Subscription Keys

APIs published through an Azure API Management instance are secured by subscription keys by default. You need one of these keys to create a connection in Power Apps or Power Automate after the APIs are exported as custom connectors.

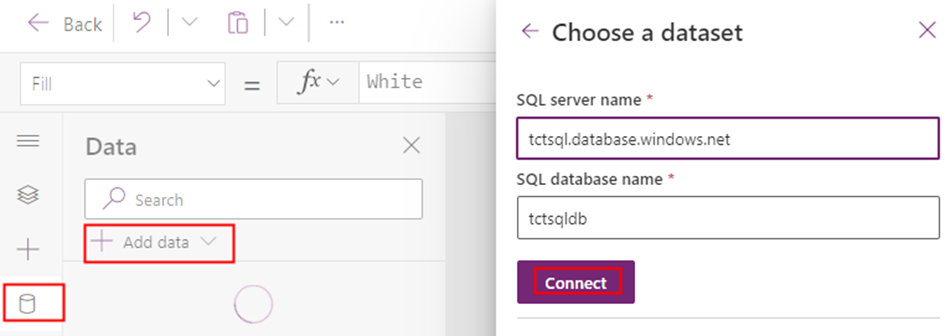

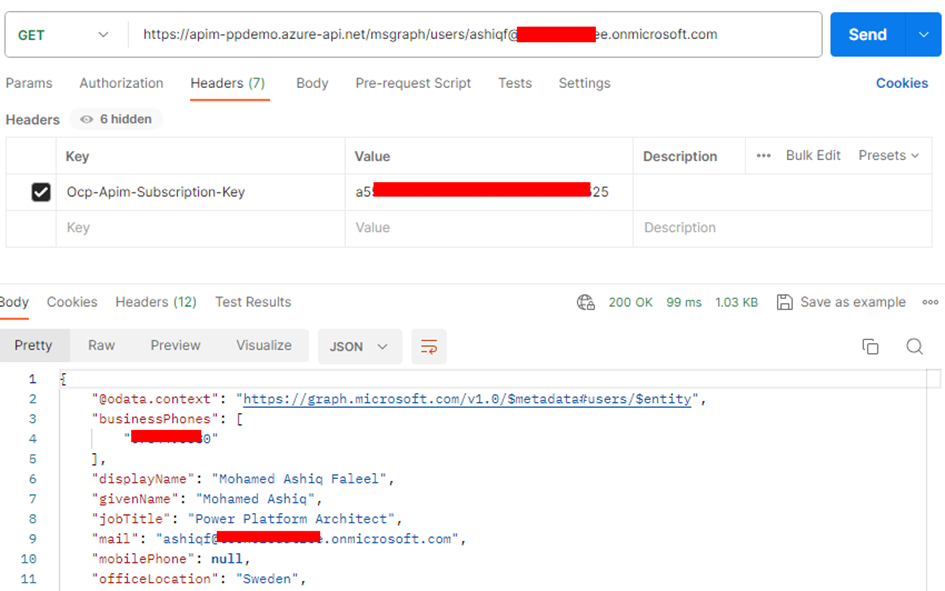

To manage these keys, open the “Subscriptions” blade from the left navigation menu of your API Management (APIM) instance in the Azure portal. Here, you can generate a new key or use an existing one. Copy the key from the portal; you will need it to create the connection in a later section.

You can test the API from Postman by passing the subscription key, as shown below:

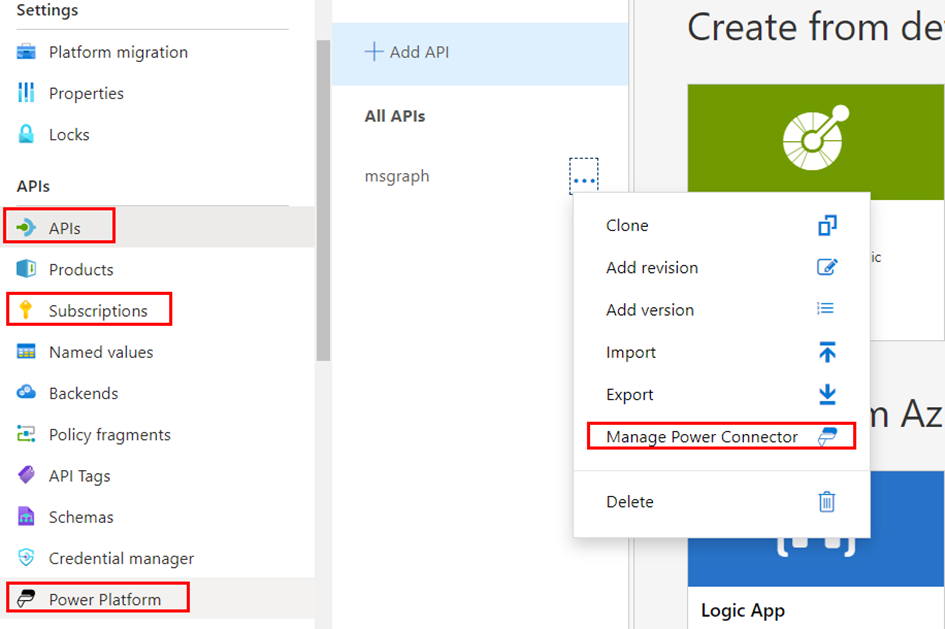
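The same check can be scripted outside Postman. The following is a minimal sketch, assuming the subscription key is sent in the `Ocp-Apim-Subscription-Key` request header (the default header name in API Management). The gateway URL, the `/msgraph/users` path, and the key value are hypothetical placeholders; replace them with the values from your own instance.

```python
import json
import urllib.request

# Hypothetical placeholders: use your own gateway URL, API path, and key.
GATEWAY_URL = "https://contoso-apim.azure-api.net"
API_PATH = "/msgraph/users"
SUBSCRIPTION_KEY = "<your-subscription-key>"


def build_request(gateway_url: str, api_path: str, subscription_key: str) -> urllib.request.Request:
    """Build a GET request that carries the APIM subscription key header."""
    url = gateway_url.rstrip("/") + "/" + api_path.lstrip("/")
    return urllib.request.Request(
        url,
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
        method="GET",
    )


def call_api(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON body.

    urllib raises HTTPError for non-2xx responses, for example when the
    subscription key is missing or invalid.
    """
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `call_api(build_request(GATEWAY_URL, API_PATH, SUBSCRIPTION_KEY))` sends the request. Keeping request construction separate from sending makes it easy to inspect the URL and headers before anything goes over the network.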

Exporting API as a Connector in Power Platform:

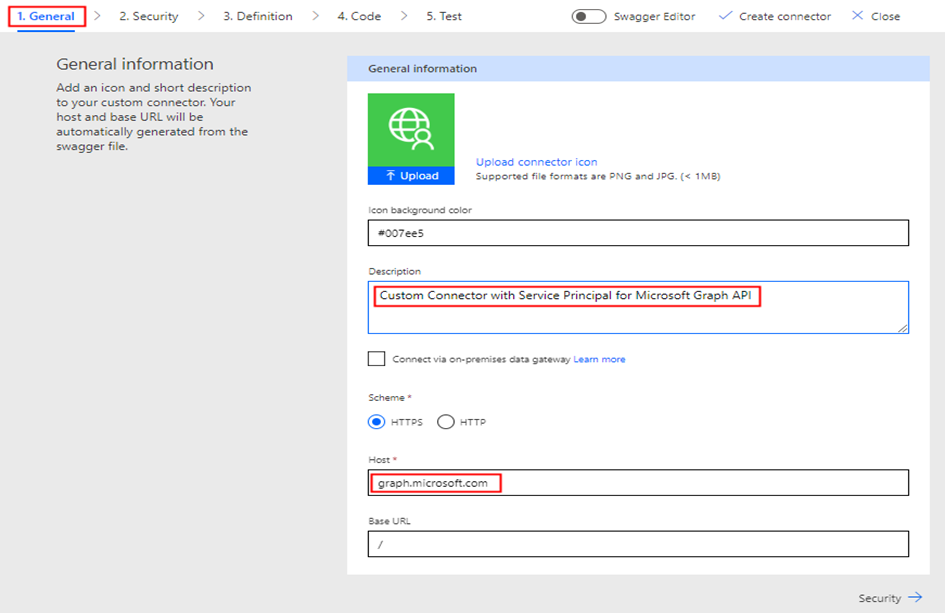

To use the APIs in your API Management instance (secured with managed identities) in Power Apps and Power Automate, you first need to export them to Power Platform as connectors. Follow these steps:

- In the left navigation menu, navigate to Power Platform under the APIs blade.

- Click on Create a connector to initiate the connector creation process.

- Choose the specific API (e.g., msgraph) that you wish to export as a connector.

- Select the Power Platform environment where you have Maker/Admin role access.

- Under API Display Name, enter a name for the connector. This will be the identifier for your connector within Power Platform.

- Click on the “Create” button to complete the process.

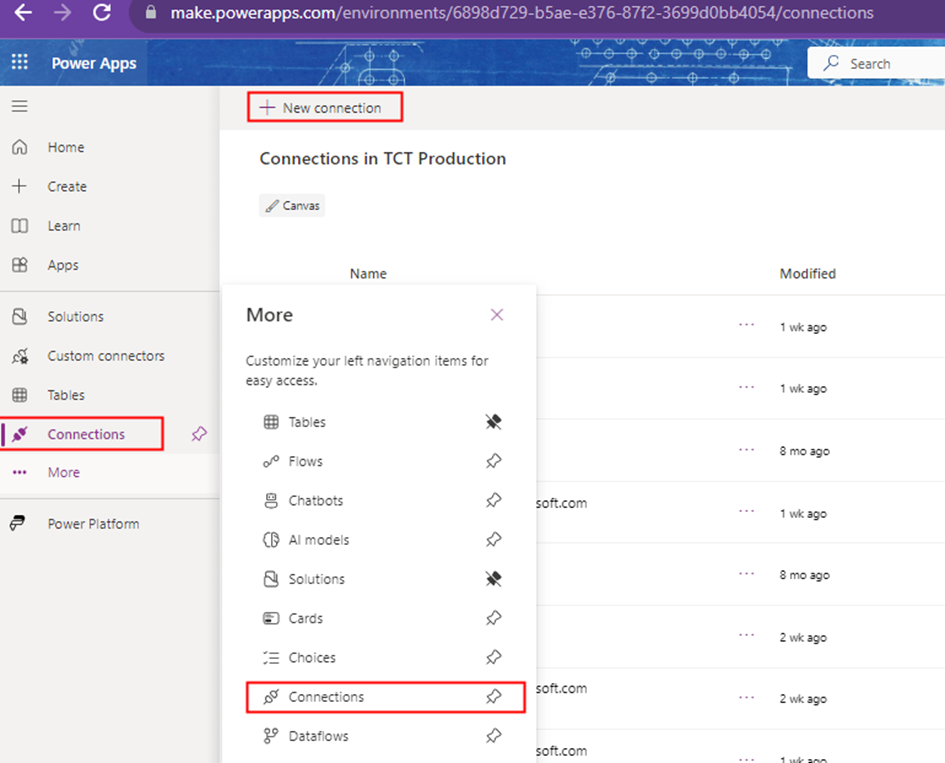

Once the connector is created, navigate to your Power Apps or Power Automate portal. You’ll see the API listed under Custom Connectors on the left navigation bar, in the environment you selected when exporting from the API Management instance.

- Click on the Edit icon to review and test the connector actions.

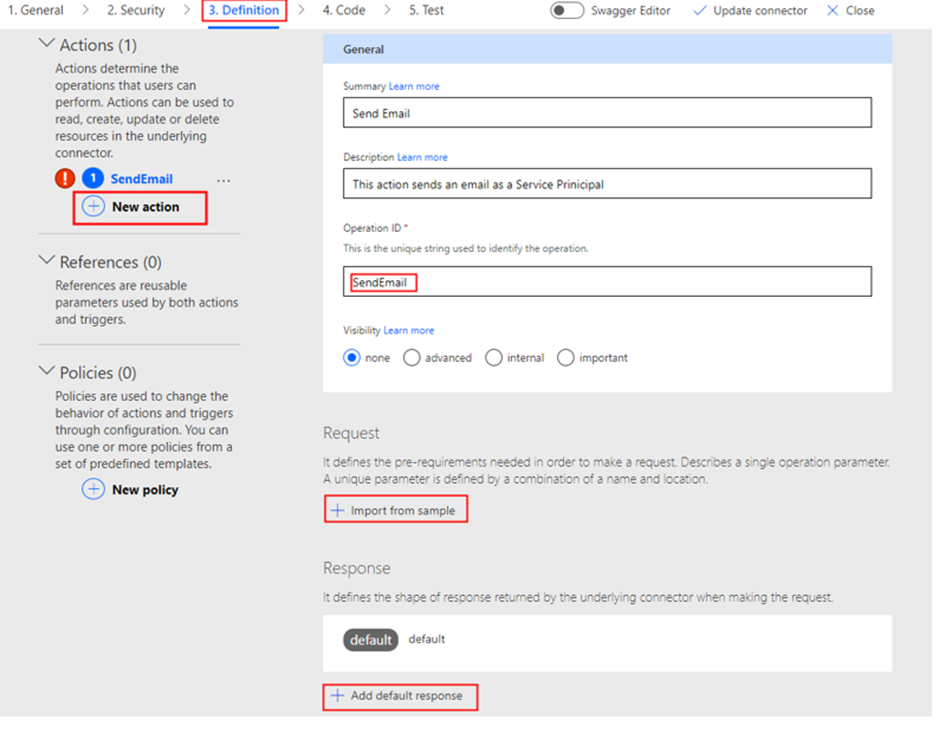

- Explore the Definition tab to view the API operations from the APIM instance, now listed as Actions.

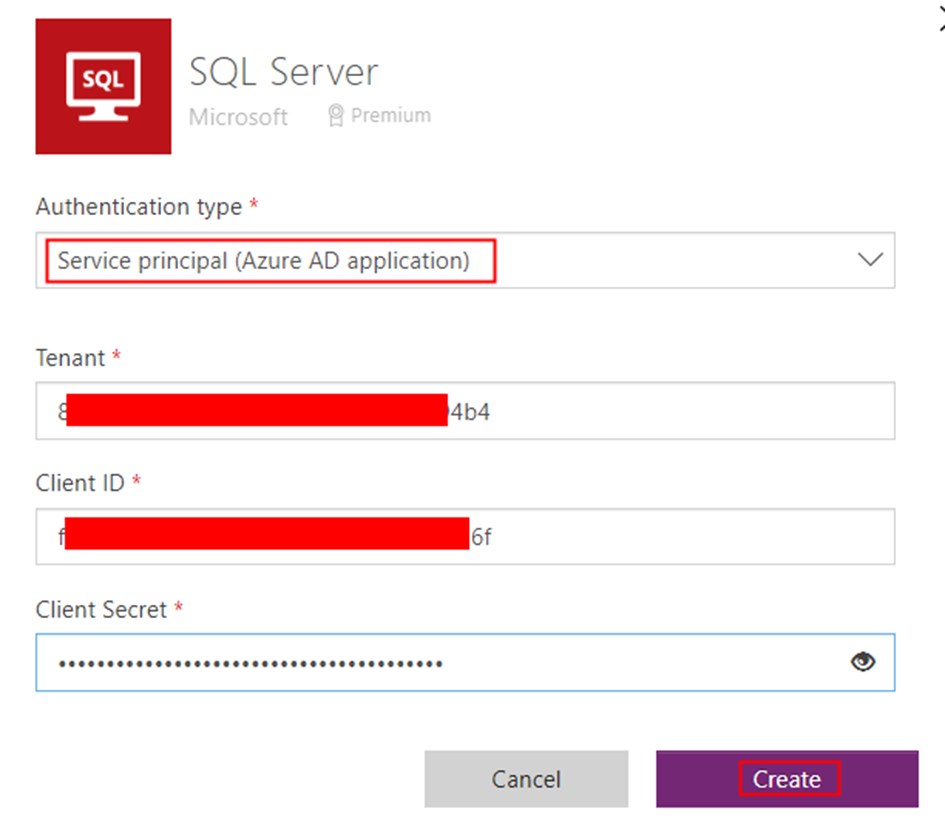

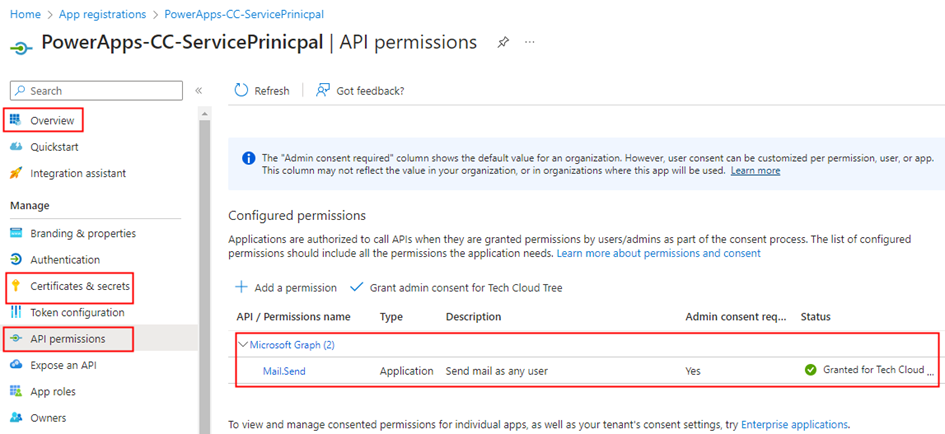

- Verify the authentication type of the connector on the Security tab. It should be set to API key.

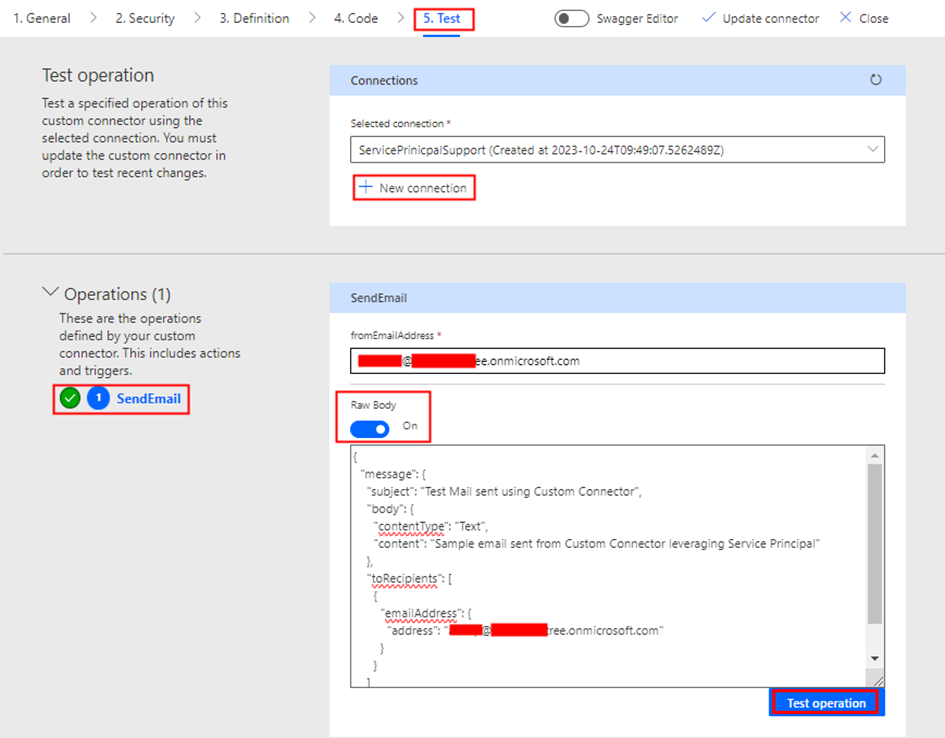

- Begin by creating a connection in the Test tab. Click on + New connection to start testing.

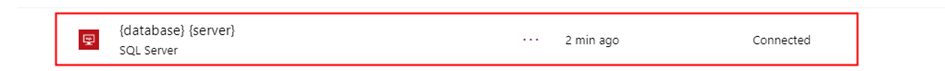

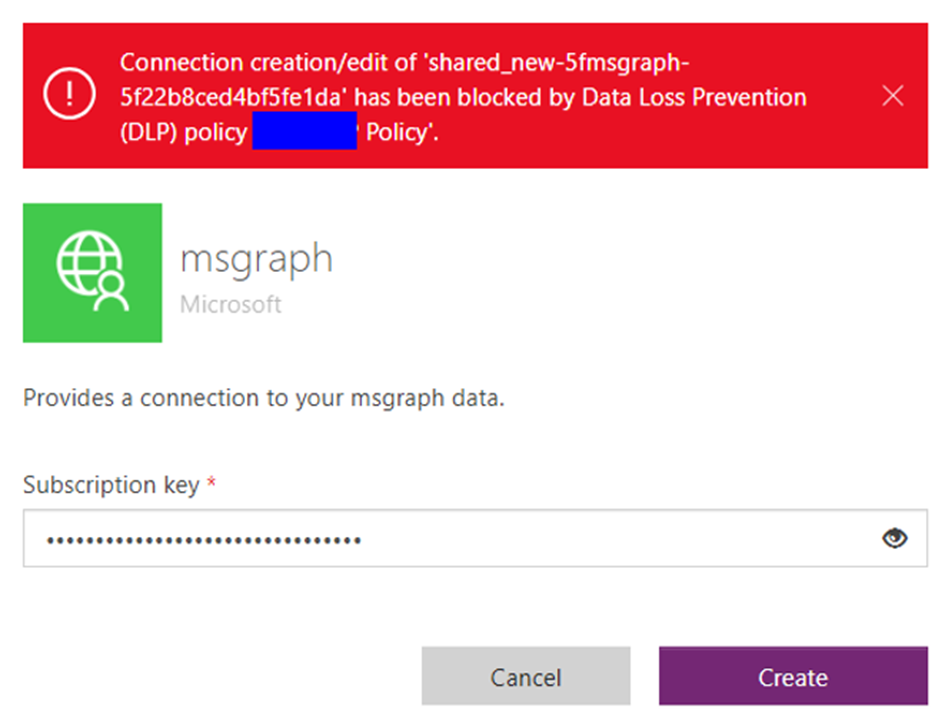

- Enter the Subscription key, which you previously copied from the Azure portal for the API Management (APIM) instance. This key establishes the secure link between your connector and the APIM services. If there is no error, the connection will be created.

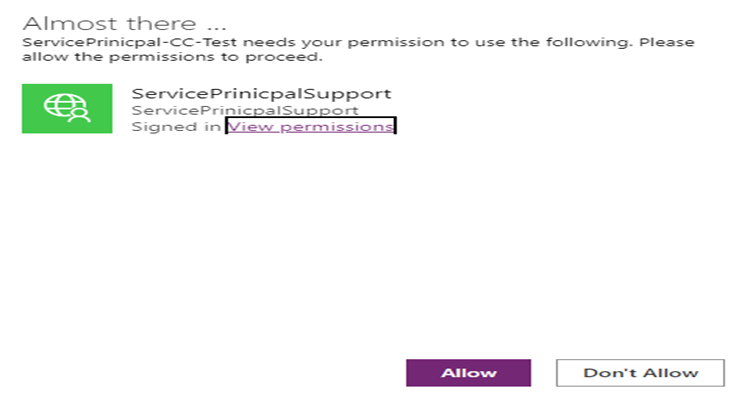

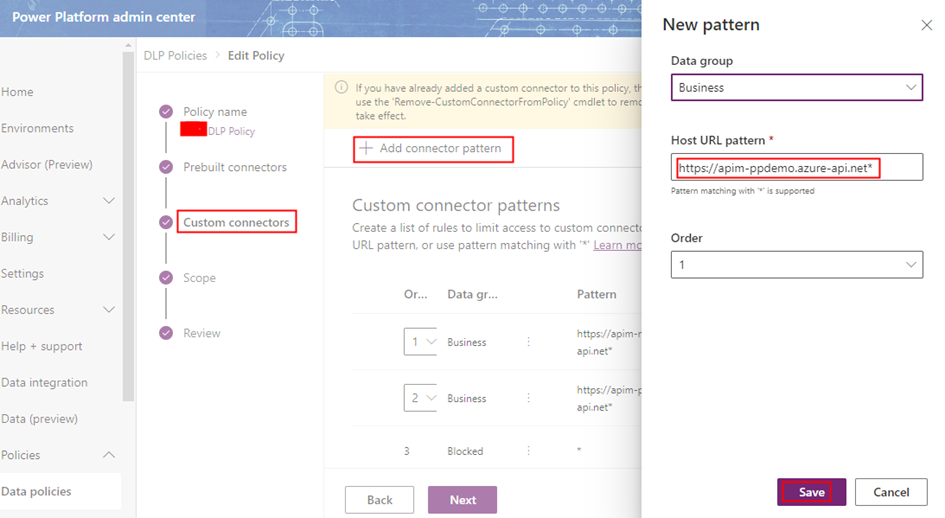

If you encounter the error message below, connection creation has been blocked by a Data Loss Prevention (DLP) policy:

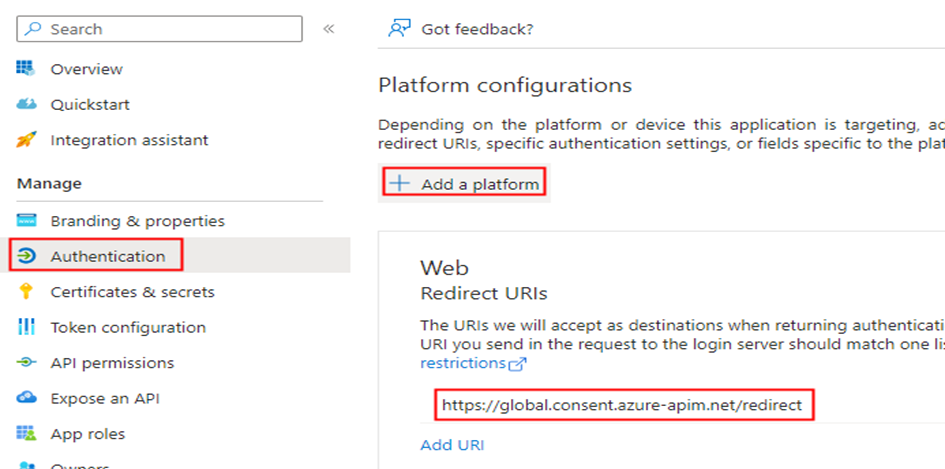

To resolve this, copy the Gateway URL from the Overview section of the API Management instance in the Azure portal and add it as an allowed custom connector URL pattern in the Business or Non-Business category of the DLP policy.

Note: If the CORS policy in the APIs Policies section of your API Management instance lists specific origins such as https://make.powerapps.com instead of the wildcard (*) that I used, you also need to add a policy to the custom connector under the Definition tab. Specifically, add a policy that sets the request Origin header to one of the allowed origins.

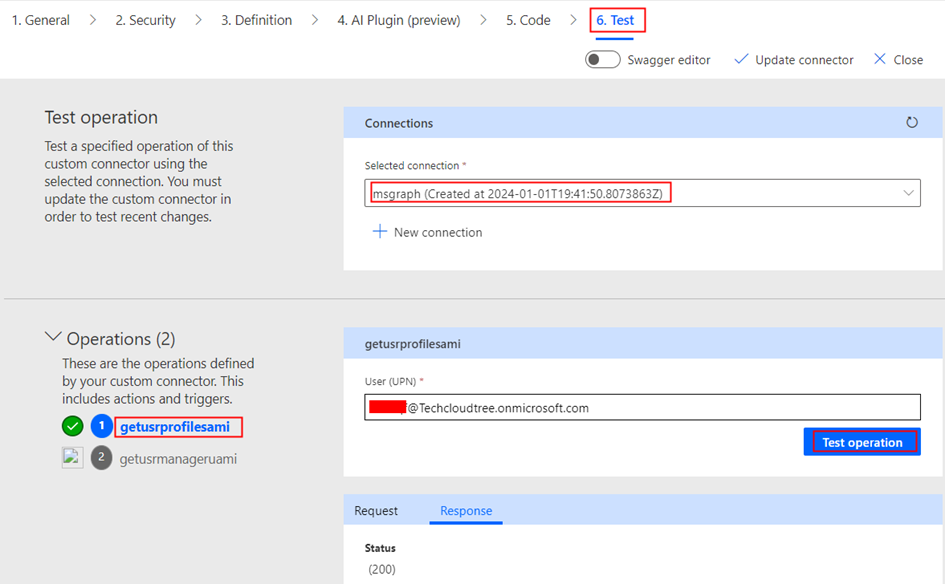
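Conceptually, such a policy rewrites the outgoing request headers before the call reaches API Management. The sketch below is a simplified model of that behaviour, not the connector's actual implementation; the function name `set_header` and the `if_exists` options are assumptions chosen for illustration.

```python
def set_header(headers: dict[str, str], name: str, value: str,
               if_exists: str = "override") -> dict[str, str]:
    """Return a copy of the headers with the given header set.

    if_exists="override" replaces an existing value;
    if_exists="skip" leaves an existing value untouched.
    Header names are compared case-insensitively, as in HTTP.
    """
    if if_exists not in ("override", "skip"):
        raise ValueError(f"unsupported if_exists value: {if_exists!r}")

    result = dict(headers)
    existing = next((k for k in result if k.lower() == name.lower()), None)
    if existing is None:
        result[name] = value
    elif if_exists == "override":
        del result[existing]
        result[name] = value
    return result


# Example: the request leaves the connector with an Origin header that
# matches an origin allowed by the APIM CORS policy.
outgoing = set_header(
    {"Ocp-Apim-Subscription-Key": "<your-subscription-key>"},
    "Origin",
    "https://make.powerapps.com",
)
```

The Origin value has to match one of the origins listed in the CORS policy exactly, including the scheme.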

Testing the Custom Connector:

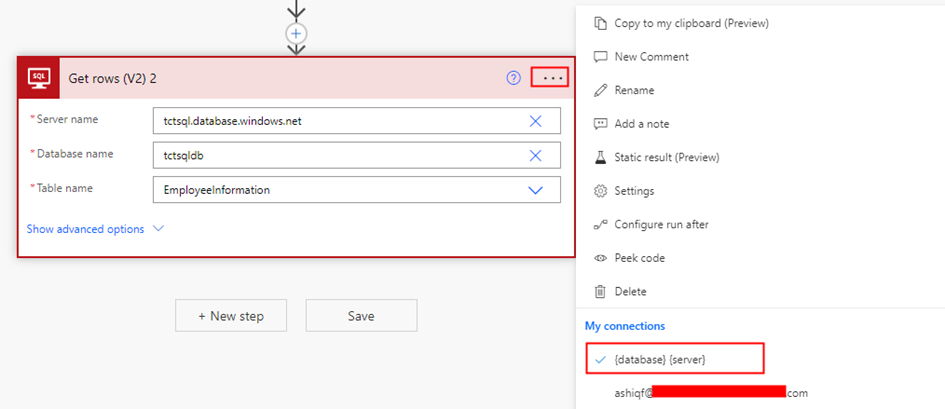

Once the connection is created, return to the edit mode of the custom connector to initiate testing of the actions. Navigate to the Test tab, where you can select the specific connection and choose the operation you wish to test. Test the operation and validate the results of the custom connector action.

Summary:

This completes Part 2 of the blog series, where we explored how to access Microsoft Graph APIs securely through API Management as a connector in Power Platform, using subscription key authentication and managed identities (System and User). In the next article, we will enhance security further by implementing OAuth authentication within the custom connector for API Management APIs. Stay tuned. I hope you found this informative, and thanks for reading. If you are visiting my blog for the first time, please do take a look at my other blog posts.
