Recently I came across a business case that required automating the conversion of Outlook email messages with embedded images to PDF documents. This can be done manually in the Outlook client using Microsoft Print to PDF, or with the browser's Print option if the message is opened in Outlook on the web. The process can be automated in Power Automate with the trigger When a new email arrives and the actions Export email, Convert file and Create file, but as of now this does not work if the email has an embedded image or HTML content. There are third-party connectors in Power Automate from Muhimbi and Plumsail which might have this functionality, but I've not tested those yet. The Power Automate action Export email converts the email to an .eml file.

An EML file is an email message containing the content of the message, along with the subject, sender, recipient(s), and date of the message in plain text format. Once you have the .eml file, change the file extension from .eml to .txt to view the content in a text editor. If there is an embedded image, it is stored in Base64 format. You can also change the .eml file extension to .mht and open it directly in Internet Explorer.
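To make the Base64 point concrete, here is a minimal Python sketch using only the standard library `email` package. It builds a small message in memory with one inline image and then reads it back the way you would read an exported .eml file. The addresses, the `logo1` content ID and the placeholder image bytes are assumptions made up for the demo, not anything produced by Power Automate.

```python
from email import message_from_bytes, policy
from email.message import EmailMessage


def build_sample_eml() -> bytes:
    """Build a tiny message with one inline image, serialized like an .eml file."""
    msg = EmailMessage()
    msg["Subject"] = "Sample with embedded image"
    msg["From"] = "sender@example.com"      # hypothetical address
    msg["To"] = "recipient@example.com"     # hypothetical address
    msg.set_content("Plain-text fallback body.")
    msg.add_alternative('<p>Hello <img src="cid:logo1"></p>', subtype="html")
    # Placeholder bytes stand in for real image data (assumption for the demo).
    fake_png = b"\x89PNG\r\n\x1a\n" + b"\x00" * 16
    html_part = msg.get_payload()[1]
    # Attaching the image to the HTML part turns it into multipart/related.
    html_part.add_related(fake_png, maintype="image", subtype="png", cid="<logo1>")
    return msg.as_bytes()


def list_embedded_images(raw_eml: bytes):
    """Return (content type, transfer encoding, decoded size) for each image part."""
    msg = message_from_bytes(raw_eml, policy=policy.default)
    images = []
    for part in msg.walk():
        if part.get_content_maintype() == "image":
            images.append(
                (
                    part.get_content_type(),
                    str(part["Content-Transfer-Encoding"]),
                    len(part.get_content()),  # get_content() decodes the Base64
                )
            )
    return images


if __name__ == "__main__":
    for image in list_embedded_images(build_sample_eml()):
        print(image)
```

Running the same `list_embedded_images` function on the bytes of a real exported .eml file should list its embedded images in the same way.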

For this blog post I've used a third-party API service from ConvertAPI to convert the email message to PDF. They have REST API endpoints to convert Word, Excel, PowerPoint, HTML, PDF and image formats. ConvertAPI also has a free plan where you get 1500 seconds of API execution time when you sign up.

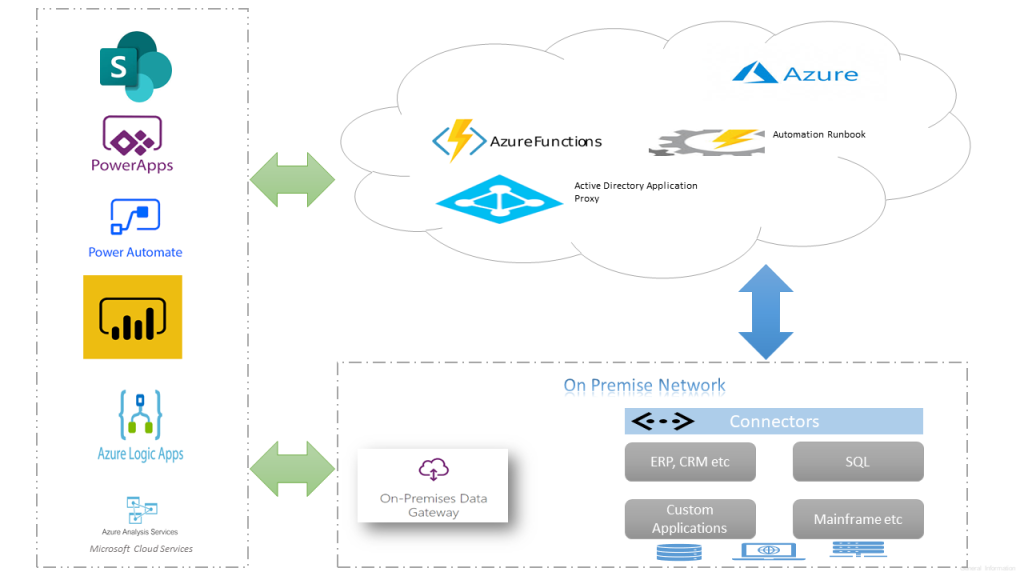

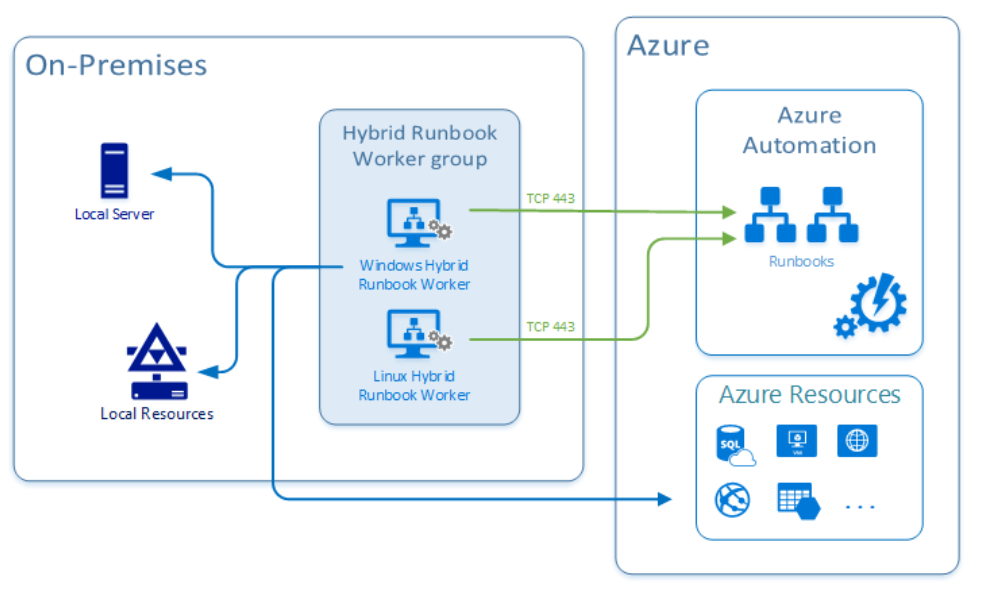

You can also create your own API service hosted in Azure for the conversion, using .NET libraries like iTextSharp, GroupDocs, PDFSharp etc. Let's go ahead & create flows to

- Convert Email to PDF – Without Embedded image

- Convert Email to PDF – With Embedded image

The packages for the above two flows can be downloaded from the GitHub repo.

Convert Email to PDF – Without Embedded image:

The Power Automate connector OneDrive for Business has an action Convert file (preview) that converts files to different formats such as PDF, HTML, JPG etc. This connector can be used to convert a simple email without an embedded image.

Step 1: Create a flow with Automated trigger When a new email arrives & configure the trigger parameters by clicking Show advanced options.

Step 2: Add the action Export email with Message Id from the output of the trigger. This action creates the .eml file.

Step 3: Add the action Create file from the connector OneDrive for Business. Select the Folder path from your OneDrive, enter the File Name for the .eml file & set the File Content to Body from the output of the previous action Export email.

Step 4: Add the action Convert file from the connector OneDrive for Business with Id from the output of the previous action Create file.

Step 5: Add the action Create file from the connector OneDrive for Business. This step stores the PDF file back in OneDrive. Select the Folder path from your OneDrive to store the PDF file, enter the File Name for the PDF file & set the File Content to File content from the output of the action Convert file.

Note: The storage location I've chosen is OneDrive; you can choose SharePoint, Azure Blob Storage etc. Based on your needs, you can choose to delete the .eml files after the file conversion is done.

Convert Email to PDF – With Embedded image:

As already mentioned, the previous flow will not convert an email with an embedded image as expected. Have the API endpoint from ConvertAPI for converting email to PDF ready. The endpoint takes the secret as a query string, as shown below

https://v2.convertapi.com/convert/eml/to/pdf?Secret=yoursecretkeyfromconvertapi

Note: In this flow I will be using the .eml file generated by the previous flow.

Step 1: Create a flow with Instant trigger Manually trigger a flow.

Step 2: Add the action Get file content from the connector OneDrive for Business. Select the .eml file which has the embedded image from the storage location, i.e. the file from OneDrive.

Step 3: Add the action Compose from the connector Data Operation. This step converts the file content to its Base64 representation, which the ConvertAPI endpoint requires. In the Inputs field, go to the expression editor and add the function base64(file content from the previous action Get file content) to convert the .eml content to Base64.

Step 4: Add the action HTTP (Premium) from the connector HTTP to make a POST request to the ConvertAPI endpoint.

Method: POST

URI: https://v2.convertapi.com/convert/eml/to/pdf?Secret=yoursecretkeyfromconvertapi

Headers:

Key: Content-Type

Value: application/json

Body: You can generate this from the ConvertAPI site by uploading a .eml file on the site. Once this data is added to the Body parameter of the HTTP action, change the Data parameter to the output of the previous action Compose – Convert to Base64

{

"Parameters": [

{

"Name": "File",

"FileValue": {

"Name": "myemailfile.eml",

"Data": "@{outputs('Compose_-_Convert_to_Base64')}"

}

}

]

}
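For readers who want to see what the Compose and HTTP steps amount to outside Power Automate, here is a minimal Python sketch that builds the same request body from raw .eml bytes. The function name `build_convert_body` is hypothetical, and the sketch stops short of sending the request; it only mirrors the JSON shape shown above.

```python
import base64
import json


def build_convert_body(eml_bytes: bytes, file_name: str = "myemailfile.eml") -> str:
    """Return the JSON body for the eml-to-pdf request, with the file as Base64."""
    payload = {
        "Parameters": [
            {
                "Name": "File",
                "FileValue": {
                    "Name": file_name,
                    # Same job as the base64() expression in the Compose step.
                    "Data": base64.b64encode(eml_bytes).decode("ascii"),
                },
            }
        ]
    }
    return json.dumps(payload)


if __name__ == "__main__":
    # Tiny stand-in for the content of a real .eml file (assumption for the demo).
    sample = b"Subject: hello\r\n\r\nThis is the message body."
    print(build_convert_body(sample))
```

The resulting string is what the HTTP action posts to the URI above with the Content-Type header set to application/json.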

Step 5: Add the action Parse JSON from the connector Data Operation. This step parses the response of the HTTP POST action to the ConvertAPI endpoint. You can generate the schema by copying the HTTP action output from the flow run history. The schema will look like

{

"type": "object",

"properties": {

"ConversionCost": {

"type": "integer"

},

"Files": {

"type": "array",

"items": {

"type": "object",

"properties": {

"FileName": {

"type": "string"

},

"FileExt": {

"type": "string"

},

"FileSize": {

"type": "integer"

},

"FileData": {

"type": "string"

}

},

"required": [

"FileName",

"FileExt",

"FileSize",

"FileData"

]

}

}

}

}

Step 6: Add the Compose action to convert the Base64 data from the HTTP response to binary, which is needed to create the PDF. Select FileData from the output of the Parse JSON action; this automatically creates an Apply to each, since Files is an array. Then add the following to the inputs of the Compose action

base64ToBinary(items('Apply_to_each')?['FileData'])
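The Parse JSON, Apply to each and Compose steps can be sketched in Python as follows. This is only an illustration under the assumption that the response matches the schema from Step 5; the function name `extract_files` and the sample response values are made up for the demo.

```python
import base64
import json


def extract_files(response_text: str):
    """Return (file name, decoded bytes) for every entry in the Files array."""
    response = json.loads(response_text)
    return [
        # Same job as base64ToBinary() inside the Apply to each loop.
        (item["FileName"], base64.b64decode(item["FileData"]))
        for item in response.get("Files", [])
    ]


if __name__ == "__main__":
    # Hand-written sample shaped like the schema above, not a real API response.
    fake_pdf = b"%PDF-1.4 placeholder"
    sample_response = json.dumps(
        {
            "ConversionCost": 1,
            "Files": [
                {
                    "FileName": "myemailfile.pdf",
                    "FileExt": "pdf",
                    "FileSize": len(fake_pdf),
                    "FileData": base64.b64encode(fake_pdf).decode("ascii"),
                }
            ],
        }
    )
    for name, data in extract_files(sample_response):
        print(name, len(data), "bytes")
```

Each decoded byte string is what the Create file action receives as its File content.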

Now add the Create file action from the connector OneDrive for Business. The parameter File content should be the output of the Compose action.

Now it's time to test the flow. Run the flow & check your OneDrive for the PDF file, which should now include the embedded image.

Summary: I am not endorsing the ConvertAPI service for converting email to PDF. This is just a sample use case that gives you some knowledge of how the different actions are used & some information on the .eml file format which Microsoft uses for storing email content. If the conversion is going to be heavily used or if the data is sensitive, then I advise you to create a REST API endpoint of your own hosted in Azure for the conversion. Hope you find this post useful & informative. Let me know if you have any comments or feedback by posting a comment below.