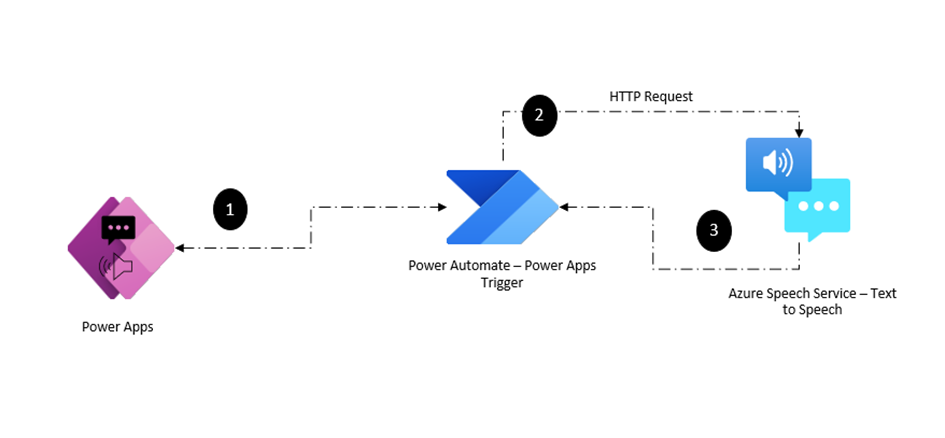

Capabilities like text-to-speech (TTS) and audio playback can take your applications to new heights of user engagement and accessibility. In this blog post, we’ll look at integrating text-to-speech and audio playback functionalities into Power Apps using Power Automate and Azure Speech Services. Whether you’re looking to provide dynamic narration, streamline communication, or enhance accessibility, this post will walk you through the steps to integrate TTS capabilities into your Power Apps projects.

Prerequisites:

Before you begin, ensure that you have the following prerequisites in place:

- Maker role in the Power Platform environment

- Premium license for Power Apps and Power Automate (the HTTP connector is a premium connector)

- Access to an Azure subscription

- An Azure Speech service resource for text to speech

Creating Speech Services in Azure for Text to Speech:

Azure provides Speech Services that enable developers to integrate advanced speech capabilities into their applications, including Text to Speech (TTS). With Azure Speech Services, you can convert text into speech in various languages and voices.

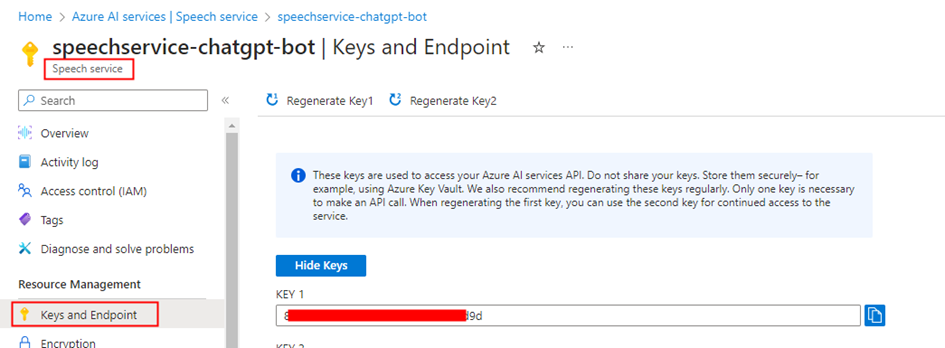

Step 1: Create a Speech service resource in the Azure portal.

Step 2: Copy the Key from the Keys and Endpoint section within the Resource Management blade. This Key is used for authentication when making requests to the Speech service APIs, enabling text-to-speech conversion in the Power Automate flow through the HTTP connector.

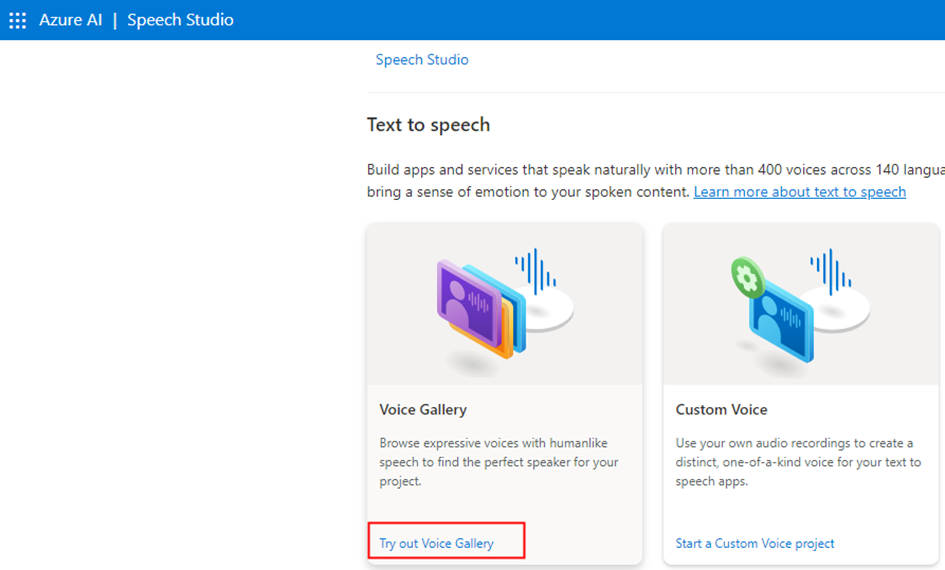

Step 3: Go to Speech Studio and choose a voice from the gallery in the Text to Speech section. Alternatively, you can create a custom voice using your own audio recordings. Speech Studio can also be opened from the Overview section of the Speech service resource in the Azure portal.
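If you prefer to check the available voices from a script rather than the gallery, the Speech service also exposes a regional voices list endpoint. The snippet below is a minimal sketch, assuming Python 3.9+ and the same key copied in Step 2; the helper names (`voices_list_request`, `short_names`, `fetch_voices`) are hypothetical and only for illustration.

```python
import json
import urllib.request


def voices_list_request(region: str, key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for the regional voices list."""
    url = f"https://{region}.tts.speech.microsoft.com/cognitiveservices/voices/list"
    return urllib.request.Request(url, headers={"Ocp-Apim-Subscription-Key": key})


def short_names(voices: list[dict], locale: str) -> list[str]:
    """Return the ShortName of every voice whose Locale matches."""
    return sorted(v["ShortName"] for v in voices if v.get("Locale") == locale)


def fetch_voices(region: str, key: str) -> list[dict]:
    """Send the request. Needs network access and a valid key."""
    with urllib.request.urlopen(voices_list_request(region, key), timeout=30) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Illustrative data only; a real response contains many more fields and voices.
    sample = [
        {"ShortName": "en-US-JennyNeural", "Locale": "en-US"},
        {"ShortName": "de-DE-KatjaNeural", "Locale": "de-DE"},
    ]
    print(short_names(sample, "en-US"))
```

With a real key, `short_names(fetch_voices("westeurope", key), "en-US")` should return the short names you can use in the `name` attribute of the SSML `voice` element.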

Power Automate Flow to convert the text to speech:

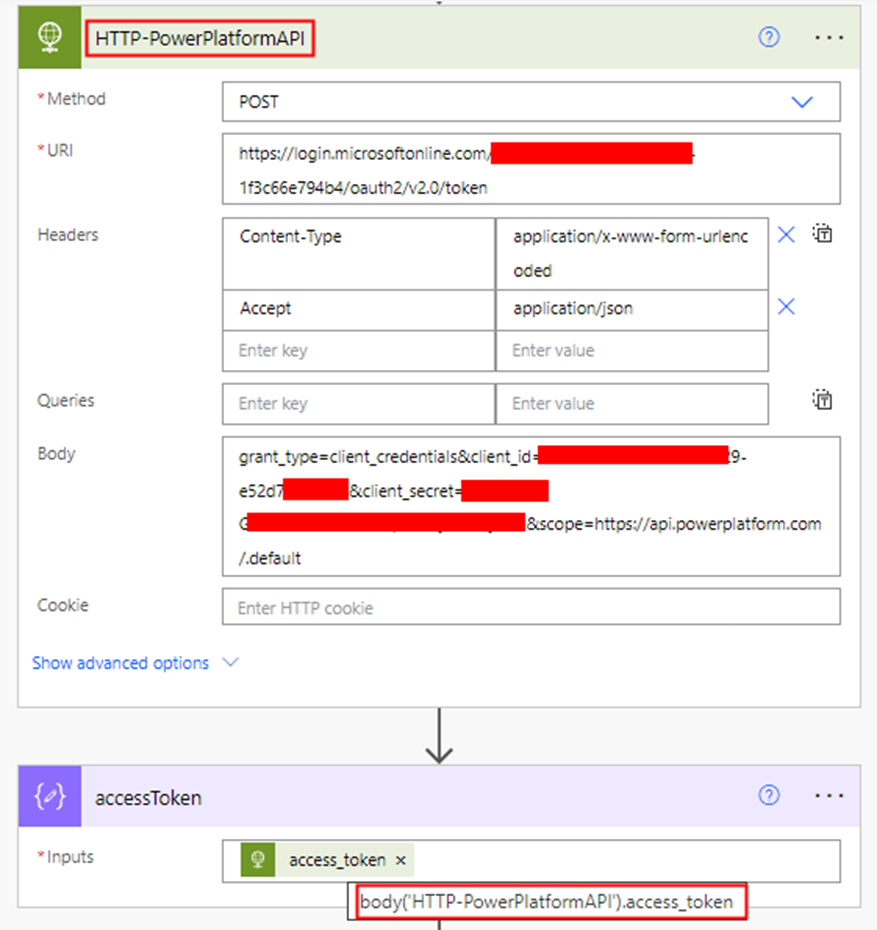

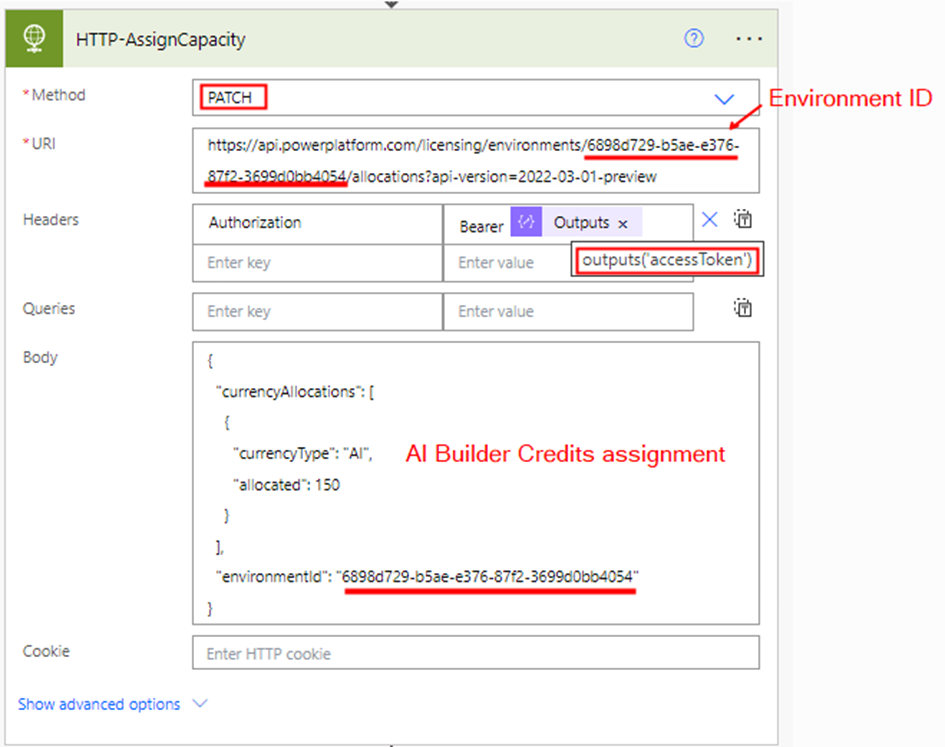

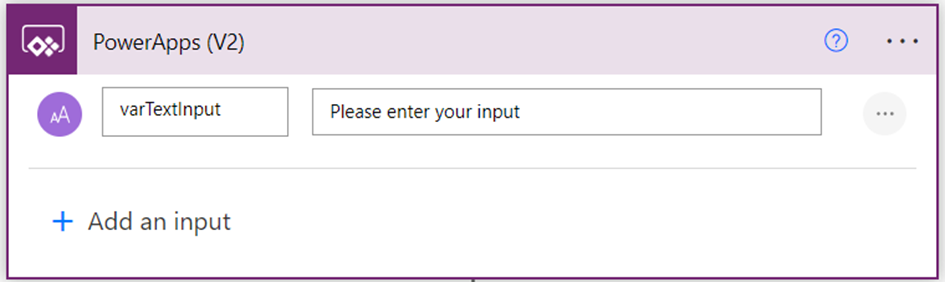

Power Automate orchestrates the integration between Power Apps and Azure Speech Services. Create an instant cloud flow with the trigger “PowerApps (V2)”, either from the Power Automate portal or directly from the Power Apps maker interface. Add a text input named varTextInput, as shown below, to receive the text sent from Power Apps.

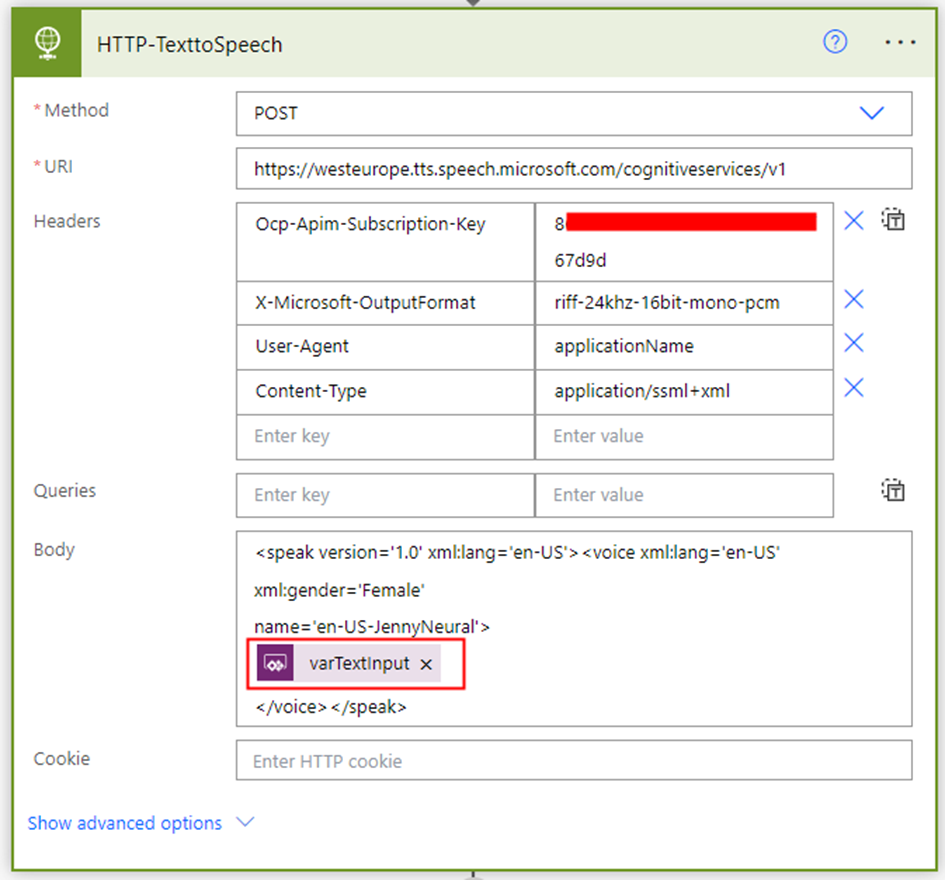

The next step converts the text to audio by calling the Text to Speech REST API through the HTTP connector action. Add the HTTP action with the following request details:

Method: POST

URI: Depending on the region where you created the Azure Speech resource, select the corresponding REST API endpoint from the list in the Microsoft documentation. For instance, if the Speech service resource is created in West Europe, the URL will be:

https://westeurope.tts.speech.microsoft.com/cognitiveservices/v1

Headers:

| Header | Value |
| --- | --- |
| Ocp-Apim-Subscription-Key | KeyCopiedEarlierfromtheAzureSpeechResource |
| X-Microsoft-OutputFormat | riff-24khz-16bit-mono-pcm |
| User-Agent | applicationName |
| Content-Type | application/ssml+xml |

Body:

<speak version='1.0' xml:lang='en-US'><voice xml:lang='en-US' xml:gender='Female'

name='en-US-JennyNeural'>

@{triggerBody()['text']}

</voice></speak>

In the request body, insert the varTextInput input defined in the PowerApps (V2) trigger. I have used the voice en-US-JennyNeural; you can pick a different one from the voice gallery discussed above.
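Before wiring the request into the flow, it can help to reproduce it outside Power Automate to confirm that the key, region, and voice work. The following is a minimal sketch, assuming Python 3 with only the standard library; the function names (`build_ssml`, `build_tts_request`, `synthesize`) are hypothetical, and the URL, headers, and SSML mirror the values listed above. Because SSML is XML, the sketch escapes characters such as `&` and `<` in the input text. The flow expression inserts the text as-is, so input containing those characters may need similar handling there.

```python
import urllib.request
from xml.sax.saxutils import escape


def build_ssml(text: str, voice: str = "en-US-JennyNeural", lang: str = "en-US") -> str:
    """Wrap the text in the same SSML envelope used in the flow, XML-escaped."""
    return (
        f"<speak version='1.0' xml:lang='{lang}'>"
        f"<voice xml:lang='{lang}' xml:gender='Female' name='{voice}'>"
        f"{escape(text)}"
        "</voice></speak>"
    )


def build_tts_request(region: str, key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) the POST request described in the HTTP action."""
    url = f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1"
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "X-Microsoft-OutputFormat": "riff-24khz-16bit-mono-pcm",
        "User-Agent": "applicationName",  # placeholder, as in the table above
        "Content-Type": "application/ssml+xml",
    }
    body = build_ssml(text).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def synthesize(region: str, key: str, text: str) -> bytes:
    """Send the request and return the audio bytes. Needs network and a valid key."""
    with urllib.request.urlopen(build_tts_request(region, key, text), timeout=30) as resp:
        return resp.read()


if __name__ == "__main__":
    # Prints the SSML only; no network call is made here.
    print(build_ssml("Fish & chips"))
```

Calling `synthesize("westeurope", key, "Hello")` with your own key should return WAV bytes that you can write to a file and play locally.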

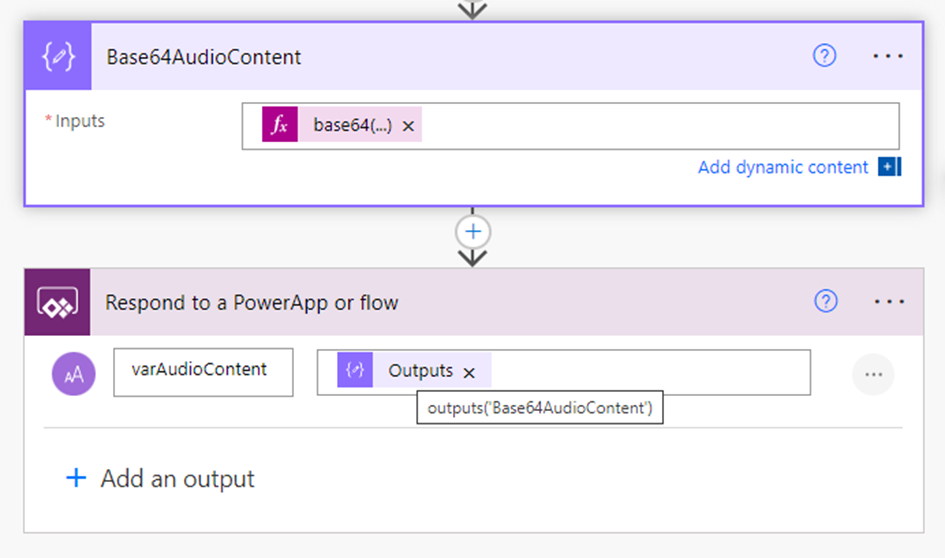

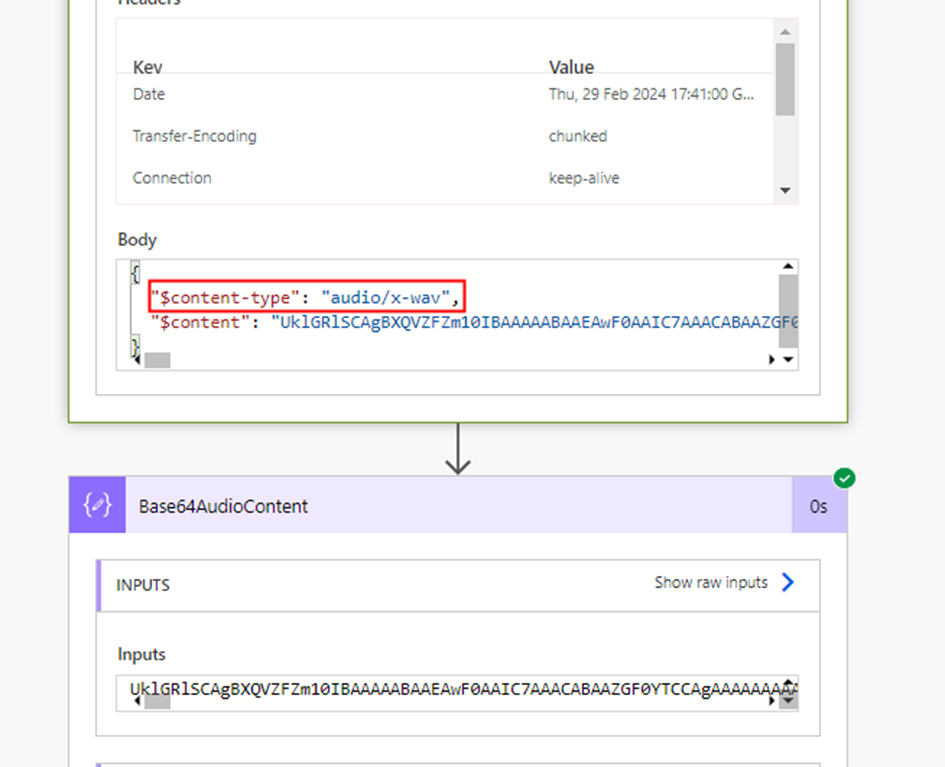

Next, add a Compose action to convert the audio generated from the HTTP action into base64 format. This will serve as the text output passed in the Respond to a PowerApp or flow action, as shown below:

Base64AudioContent compose action expression: base64(body('HTTP-TexttoSpeech'))
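To see what this step does to the audio, here is a minimal sketch in Python of the same binary-to-text conversion. The function name `to_base64_text` and the sample bytes are made up for illustration; the flow itself only needs the expression above.

```python
import base64


def to_base64_text(audio: bytes) -> str:
    """Encode raw audio bytes as base64 text, like the Compose action's base64()."""
    return base64.b64encode(audio).decode("ascii")


if __name__ == "__main__":
    # Stand-in bytes, not real audio from the service.
    sample = b"RIFF\x00\x00\x00\x00WAVE"
    encoded = to_base64_text(sample)
    # Decoding restores the original bytes exactly.
    print(base64.b64decode(encoded) == sample)
```

Base64 represents every 3 bytes as 4 characters, so the text returned to Power Apps is roughly a third larger than the audio itself. That is worth keeping in mind for long passages.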

Save the flow.

Power Apps for Text Narration:

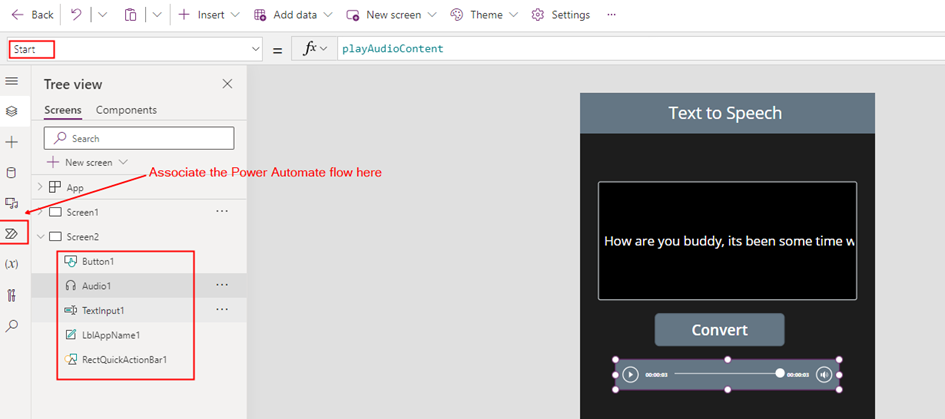

Let’s build the app for the text narration feature, where users enter text that is converted into audio by the Power Automate flow created earlier. On the canvas, add a Text Input control for entering the text, an Audio control to play the audio generated by the Azure text-to-speech service, and a Button to trigger the flow. Make sure the flow is added to the app. Add the following code to the OnSelect property of the button:

// Reset the Audio1 control to its default state, clearing any previous audio.

Reset(Audio1);

// Run the TexttoSpeechFlow Power Automate flow, passing the text from TextInput1 as input.

// Store the result (converted audio) in the varconvertedAudio variable.

Set(varconvertedAudio, TexttoSpeechFlow.Run(TextInput1.Text));

// Set the playAudioContent variable to false, ensuring that any previous audio playback is stopped.

Set(playAudioContent, false);

// Set the playAudioContent variable to true, triggering playback of the newly converted audio.

Set(playAudioContent, true);

The variable playAudioContent is used in the Start property of the Audio control to play the audio automatically.

The Media property of the Audio control should have the following formula, where varaudiocontent is the name of the output variable added in the “Respond to a PowerApp or flow” action of the Power Automate flow:

"data:audio/x-wav;base64," & varconvertedAudio.varaudiocontent

Here x-wav is the format of the audio generated by the Text to Speech REST API in the Power Automate flow. You can verify it in the output of the HTTP action HTTP-TexttoSpeech.

You are now ready to test your app.
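If the Audio control stays silent while testing, one thing to check is whether the string in the Media property is a well-formed data URI that wraps a valid WAV file. The sketch below shows one way to do that in Python, using the standard `wave` module. It generates a short silent clip as a stand-in for the service output, and the helper names (`make_test_wav`, `to_data_uri`, `describe_data_uri`) are hypothetical. Real audio from the service may carry header details this sketch does not account for.

```python
import base64
import io
import wave

PREFIX = "data:audio/x-wav;base64,"


def make_test_wav(seconds: float = 0.1, rate: int = 24000) -> bytes:
    """Create a silent 16-bit mono PCM WAV clip as a stand-in for service output."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)      # mono
        w.setsampwidth(2)      # 16-bit samples
        w.setframerate(rate)   # 24 kHz, matching riff-24khz-16bit-mono-pcm
        w.writeframes(b"\x00\x00" * int(seconds * rate))
    return buf.getvalue()


def to_data_uri(audio: bytes) -> str:
    """Build the same kind of string the Media property formula produces."""
    return PREFIX + base64.b64encode(audio).decode("ascii")


def describe_data_uri(uri: str) -> tuple[int, int, int]:
    """Decode the data URI and return (channels, sample width in bytes, frame rate)."""
    if not uri.startswith(PREFIX):
        raise ValueError("unexpected media type prefix")
    audio = base64.b64decode(uri[len(PREFIX):])
    with wave.open(io.BytesIO(audio), "rb") as w:
        return w.getnchannels(), w.getsampwidth(), w.getframerate()


if __name__ == "__main__":
    uri = to_data_uri(make_test_wav())
    print(describe_data_uri(uri))
```

To check real output, paste the base64 text returned by the flow after the prefix and pass the result to `describe_data_uri`.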

Summary:

By combining Power Automate and Azure Speech Services, developers can quickly integrate text-to-speech and audio playback into their Power Apps. I hope you found this informative, and thanks for reading. If you are visiting my blog for the first time, please take a look at my other blog posts.
